The Cambridge Social Decision-Making Lab (CSDMLab) has a Misinformation Susceptibility Test (MIST) that purports to test people’s susceptibility to so-called “misinformation.” Researchers at the lab have used the results of the test to portray younger people (millennials and Gen Z) and conservatives, who tend to rely less on mainstream media sources for news, as particularly susceptible to misinformation.

Tag: ChatGPT

U.S. Voters Suspect AI Could Impact Their Lives as It Develops According to Poll

New poll data of registered and potential voters reveals a general consensus that artificial intelligence could pose a threat to people as it further develops.

Artificial intelligence, or AI, is technology that enables computers to learn from information and perform tasks that typically require human intelligence. Recently, AI technology has become more sophisticated and more widely used at an increasing rate.

Commentary: OpenAI and Political Bias in Silicon Valley

AI-powered image generators were back in the news earlier this year, this time for their propensity to create historically inaccurate and ethically questionable imagery. These recent missteps reinforced that, far from being the independent thinking machines of science fiction, AI models merely mimic what they’ve seen on the web, and the heavy hand of their creators artificially steers them toward certain kinds of representations. What can we learn from how OpenAI’s image generator created a series of images about Democratic and Republican causes and voters last December?

OpenAI’s ChatGPT 4 service, with its built-in image generator DALL-E, was asked to create an image representative of the Democratic Party (shown below). Asked to explain the image and its underlying details, ChatGPT explained that the scene is set in a “bustling urban environment [that] symbolizes progress and innovation . . . cities are often seen as hubs of cultural diversity and technological advancement, aligning with the Democratic Party’s focus on forward-thinking policies and modernization.” The image, ChatGPT continued, “features a diverse group of individuals of various ages, ethnicities, and genders. This diversity represents inclusivity and unity, key values of the Democratic Party,” along with the themes of “social justice, civil rights, and addressing climate change.”

New York Times Sues AI Giants for Alleged Copyright Violation

The New York Times sued artificial intelligence (AI) giants OpenAI and Microsoft on Wednesday for alleged copyright violation.

OpenAI’s chatbot ChatGPT and Microsoft’s Bing Chat are large language models that are trained on data from the internet and generate text based on prompts from users. The tech giants trained these chatbots with millions of the NYT’s copyrighted articles without permission, the outlet alleges in the complaint.

Senate Rejects Bill Stripping Section 230 Protections for AI in Landmark Vote

The Senate shot down a bipartisan bill Wednesday aimed at stripping legal liability protections for artificial intelligence (AI) technology.

Republican Missouri Sen. Josh Hawley and Democratic Connecticut Sen. Richard Blumenthal first introduced their No Section 230 Immunity for AI Act in June, and Hawley put it up for a unanimous consent vote on Wednesday. The bill would have eliminated Section 230 protections that currently grant tech platforms immunity from liability for the text and visual content their AI produces, enabling Americans to file lawsuits against them.

The Senate’s ‘No Section 230 Immunity for AI Act’ Would Exclude Artificial Intelligence Developers’ Liability Under Section 230

The Senate could soon take up a bipartisan bill defining the liability protections enjoyed by artificial intelligence-generated content, which could lead to considerable impacts on online speech and the development of AI technology.

Republican Missouri Sen. Josh Hawley and Democratic Connecticut Sen. Richard Blumenthal in June introduced the No Section 230 Immunity for AI Act, which would clarify that liability protections under Section 230 of the Communications Decency Act do not apply to text and visual content created by artificial intelligence. Hawley may attempt to hold a vote on the bill in the coming weeks, his office told the Daily Caller News Foundation.

CIA Creates Its Own Version of ChatGPT to Rival Chinese AI

Amid the rise of artificial intelligence (AI), the Central Intelligence Agency (CIA) has developed its own AI tool in the same vein as ChatGPT, in an effort to combat the Chinese military’s AI advances.

As Just The News reports, the CIA plans to launch its own artificial intelligence platform in order to provide its employees with easier methods of accessing intelligence and information. Specifically, the new technology is intended to let users reach the original source more quickly than before for faster analysis.

Commentary: The Way AI Fits into Broadly Rising Anti-Humanism

The future of humanity is becoming ever less human. The astounding capabilities of ChatGPT and other forms of artificial intelligence have triggered fears about the coming age of machines leaving little place for human creativity or employment. Even the architects of this brave new world are sounding the alarm. Sam Altman, chairman and CEO of OpenAI, which developed ChatGPT, recently said that artificial intelligence poses an “existential risk” to humanity and warned Congress that artificial intelligence “can go quite wrong.”

Pennsylvania House Democrats Want a New Agency to License AI-Created Products

Democratic Pennsylvania lawmakers are drafting several bills to enable regulation of artificial intelligence (AI), including one measure creating a new state agency to oversee the technology.

The new proposals build upon legislation Representative Chris Pielli (D-West Chester) announced last month that would mandate labeling of all AI-generated content. Other parts of the legislative package, which Pielli is cosponsoring alongside Representative Bob Merski (D-Erie), include a policy governing the commonwealth’s use of software and devices that perform tasks that were once possible only through human action.

Pennsylvania Lawmaker Wants AI-Made Content Labeled

A Pennsylvania lawmaker wants all content generated by artificial intelligence (AI) to be labeled and is drafting legislation to that end.

State Representative Chris Pielli (D-West Chester) insisted consumers should expect to know whether they are accessing human-created or electronically produced information. He said people will have a harder time fulfilling this expectation as AI becomes more advanced and commonly used.

Commentary: The Clear and Present AI Danger

Does artificial intelligence threaten to conquer humanity? In recent months, the question has leaped from the pages of science fiction novels to the forefront of media and government attention. It’s unclear, however, how many of the discussants understand the implication of that leap.

In the public mind, the threat either focuses narrowly on the inherent confusion of ever-better deep fakes and its consequences for the job market, or points in directions that would make a great movie: What if AI systems decide that they’re superior to humans, seize control, and put genocidal plans into practice? That latter focus is obviously the more compelling of the two.

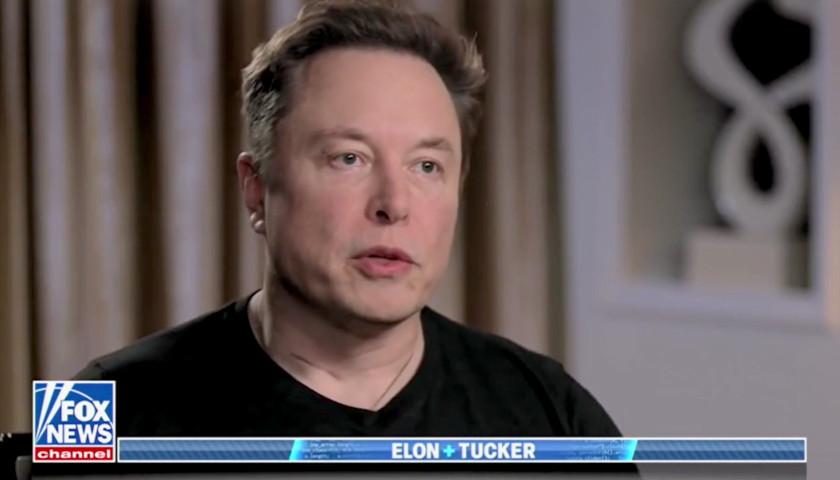

Elon Musk Tells Tucker Carlson AI Could ‘Absolutely’ Take Control of Civilization

Elon Musk told Fox News host Tucker Carlson Monday that it was “absolutely” possible for artificial intelligence to take control of civilization and make decisions for people.

“That’s real? It is conceivable that AI could take control and reach a point where you couldn’t turn it off and it would be making the decisions for people?” Carlson, a co-founder of the Daily Caller and Daily Caller News Foundation, asked Musk during an interview that aired Monday.

China Enters Artificial Intelligence Arms Race, Flops Disastrously

by Jason Cohen

China released its main ChatGPT competitor, developed by search engine giant Baidu, Thursday in Beijing, but the bot’s debut was a failure and led to the company’s shares falling, according to CNBC. During the unveiling, the bot named Ernie “summarized a science fiction novel and analyzed a Chinese idiom,” but in the middle of the presentation that Baidu promoted as live, CEO Robin Li revealed the company prerecorded the presentation for time management purposes, according to The New York Times. Li also made clear that Ernie bot was not flawless and will get better when users provide feedback, according to CNBC.

Baidu’s shares then plummeted at least 6.4 percent and as much as 10 percent in Hong Kong, in contrast to a previous rally when the giant announced it had been developing a ChatGPT competitor since 2019, according to the NYT. Baidu said 30,000 corporate clients signed up on the waitlist to access Ernie bot in less than an hour following its announcement, but media and the public did not get access, according to CNBC. Meanwhile, OpenAI announced ChatGPT-4 this week, as the updated version of the AI behind its highly popular and disruptive ChatGPT chatbot that the public has accessed…

Crom’s Crommentary: The Future of AI ‘Should Be of Enormous Concern Because, If You Start with A False Premise, The Likelihood of Coming to a Good Conclusion Is Almost Nil’

Monday morning on The Tennessee Star Report, host Leahy welcomed the original all-star panelist Crom Carmichael to the studio for another edition of Crom’s Crommentary.

Head of A&R for Baste Records Chris Wallin Comments on Whether ChatGPT Could Replace Songwriters

Friday morning on The Tennessee Star Report, host Leahy welcomed the Head of A&R for Baste Records, Chris Wallin, in studio to examine the new AI called ChatGPT and what effect it could have on the songwriting industry.
